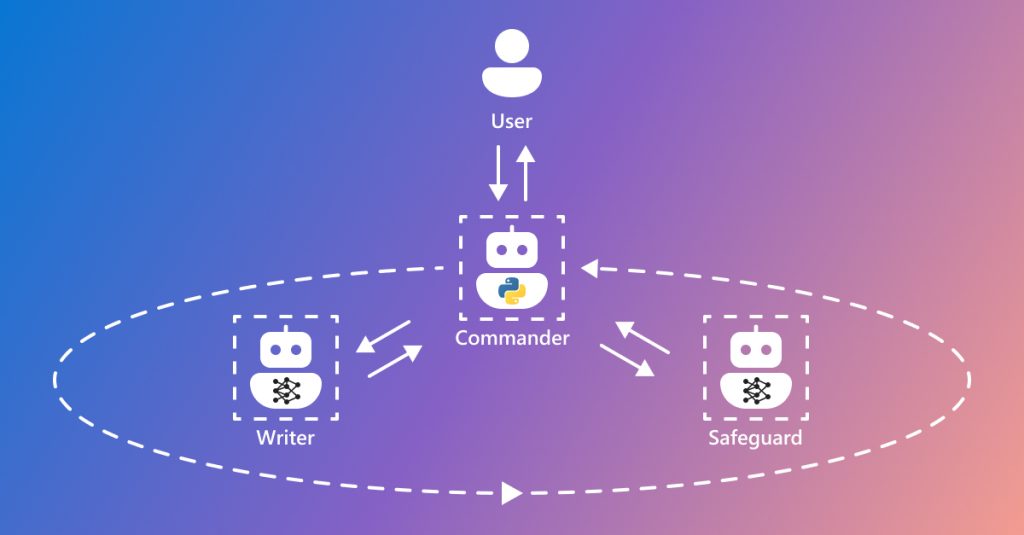

There’s been a lot of buzz recently around agentic AI tools such as Microsoft’s AutoGen and CrewAI.

Could they be used in a technical documentation workflow to automate some of the work involved in creating user documentation for software?

Image: Microsoft

What are agentic AI tools?

The core idea behind agentic AI systems is that you can create a network of specialised software agents, each with their own skills and objectives, that work together to tackle complex tasks. Proponents claim these tools can automate and streamline such tasks.

One example is a stock analysis tool made up of a research analyst agent, a financial analyst agent, and an investment advisor agent, which work together to gather information about a stock, analyse its financial situation, and advise on whether it’s a good investment. These AI agents can communicate with each other, share information, and coordinate their efforts to provide a holistic assessment.

In a technical documentation context, those complex tasks might include creating documents, ensuring their accuracy, and keeping information up to date.

Defining the roles of the AI agents

In an agentic AI system, you need to define the agent roles. Each agent in the workflow should have a specific role, mirroring the specialties needed to create technical documentation.

The agent roles might be:

- A content gathering agent
  - This agent gathers all the relevant information and content needed for the documentation. For example, it might search the codebase, design documents, technical specifications, updates from software development teams, repositories, databases, and any other relevant sources.
  - It’s also responsible for keeping the documentation aligned with the latest product versions.

- An information designer agent
  - This agent takes the raw content from the content gathering agent and organises it into a logical, structured outline for the documentation. This could involve identifying key topics, sub-topics, and dependencies.

- A technical writer agent
  - This agent drafts the content, using the structured outline as a guide. It takes information from the content gathering agent and transforms it into user guides, release notes, and help articles.

- An editorial reviewer agent
  - This agent ensures the accuracy, clarity, and quality of the content.
  - It reviews the drafts to enforce style guides and correct grammatical errors.
  - It can send feedback and edits back to the technical writer agent.

- An integration testing agent
  - This agent tests links, code snippets, and commands in the documentation to ensure they work as described, mimicking the role of a QA tester for documentation.

- A formatting agent
  - This agent handles the visual design and layout of the documentation, ensuring it meets brand guidelines and is easy to read and navigate.

- A publishing agent
  - This agent packages the finalised content and metadata, and publishes the documentation to the appropriate channels (web, PDF, etc.).
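One way to make these roles concrete, before choosing a framework, is to write each one down as data: what it needs as input and what it hands on. The sketch below is plain Python for illustration, not the AutoGen or CrewAI API; the `AgentRole` class, the role names, and artefact names such as `raw_content` and `approved_draft` are hypothetical. The `missing_inputs` helper flags any role that expects something no earlier role or external source provides, which is a cheap way to spot gaps in a role design.

```python
from dataclasses import dataclass

# Hypothetical role definitions for the documentation agents described above.
# Plain Python for illustration only; this is not the AutoGen or CrewAI API.
@dataclass(frozen=True)
class AgentRole:
    name: str
    goal: str
    inputs: tuple = ()   # artefacts this role needs before it can start
    outputs: tuple = ()  # artefacts this role hands on to later roles


ROLES = [
    AgentRole("content_gatherer", "Collect source material for the documentation",
              inputs=("codebase", "specs", "release updates"), outputs=("raw_content",)),
    AgentRole("information_designer", "Organise raw content into a structured outline",
              inputs=("raw_content",), outputs=("outline",)),
    AgentRole("technical_writer", "Draft user guides, release notes and help articles",
              inputs=("outline", "raw_content"), outputs=("draft",)),
    AgentRole("editorial_reviewer", "Check accuracy, clarity and style; send feedback",
              inputs=("draft",), outputs=("feedback", "approved_draft")),
    AgentRole("integration_tester", "Test links, code snippets and commands",
              inputs=("draft",), outputs=("test_report",)),
    AgentRole("formatter", "Apply layout and brand guidelines",
              inputs=("approved_draft",), outputs=("formatted_doc",)),
    AgentRole("publisher", "Package content and metadata and publish it",
              inputs=("formatted_doc",), outputs=("published_doc",)),
]


def missing_inputs(roles, external_sources):
    """Return (role, input) pairs that neither an earlier role nor an external source provides."""
    available = set(external_sources)
    gaps = []
    for role in roles:  # roles are checked in workflow order
        for item in role.inputs:
            if item not in available:
                gaps.append((role.name, item))
        available.update(role.outputs)
    return gaps


print(missing_inputs(ROLES, {"codebase", "specs", "release updates"}))  # []
```

For example, giving the publisher a `metadata` input without adding a role that produces it would show up as a `("publisher", "metadata")` gap.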

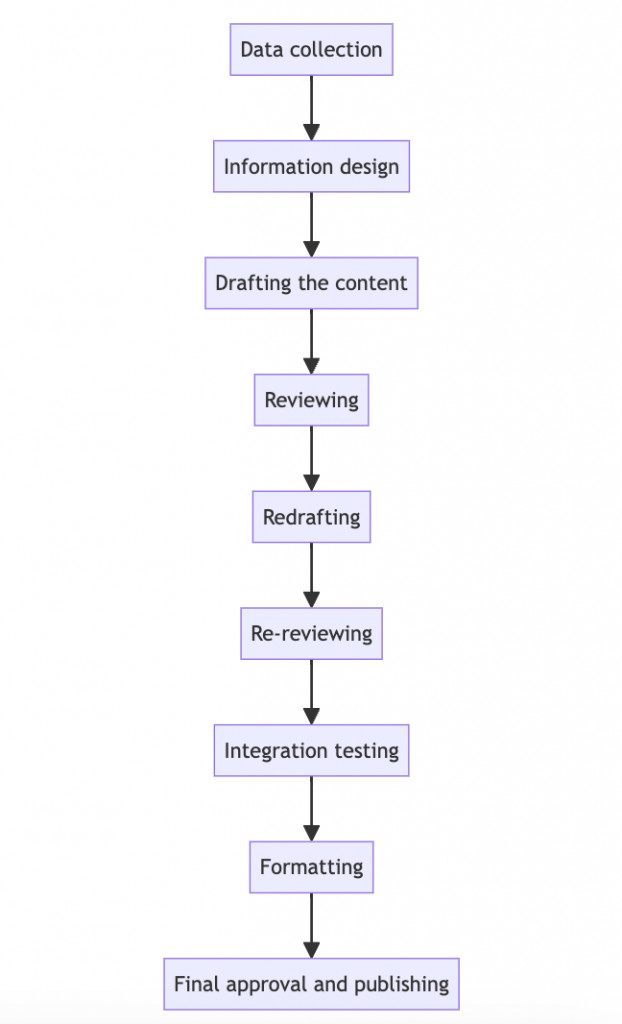

Designing the workflow

In the workflow, the agents would communicate and coordinate with each other, drawing on each other’s specialised skills and knowledge to improve the end product iteratively. The workflow would involve several stages of interaction among the agents.

In the Write the Docs forum, Mattias Sander recommended breaking each task down into the smallest possible steps when creating a workflow, and designing the process so that each step is more or less independent of the others. Essentially, you’d have one agent for every small step of your process. He said this approach reduces the likelihood of the system running out of context memory, and it might reduce the cost of the tokens it uses (tokens are the basic units that language models use to measure the length of a text, and providers charge for use of a model by the number of tokens processed).
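A minimal sketch of that advice, assuming a rough rule of thumb of about four characters per token for English text (real tokenizers vary by model, so use your provider’s tokenizer for accurate counts). The hypothetical `split_into_steps` function groups source paragraphs into chunks that each fit a per-step token budget, so each small agent step only sees a bounded amount of context. `estimate_cost` simply multiplies by whatever price you supply; no real pricing is assumed.

```python
# Rough rule of thumb (an assumption): about 4 characters per token in English.
# Real tokenizers differ by model; use your provider's tokenizer for exact counts.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Approximate token count, rounded up."""
    return -(-len(text) // CHARS_PER_TOKEN)  # ceiling division


def split_into_steps(paragraphs: list, max_tokens: int) -> list:
    """Group paragraphs into chunks that each fit within max_tokens.

    Each chunk could be handled by a separate, small agent step, so no
    single step needs the whole document in its context window.
    """
    chunks, current, used = [], [], 0
    for para in paragraphs:
        cost = estimate_tokens(para)
        if cost > max_tokens:
            raise ValueError("A single paragraph exceeds the per-step token budget.")
        if current and used + cost > max_tokens:
            chunks.append(current)  # close the current step and start a new one
            current, used = [], 0
        current.append(para)
        used += cost
    if current:
        chunks.append(current)
    return chunks


def estimate_cost(total_tokens: int, price_per_1k_tokens: float) -> float:
    """Cost at a caller-supplied price per 1,000 tokens (no real price assumed)."""
    return total_tokens / 1000 * price_per_1k_tokens
```

For example, three 40-character paragraphs (about 10 tokens each) with a 25-token budget produce two steps: the first two paragraphs together, then the third on its own.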

We asked ChatGPT and Claude to suggest a workflow. They suggested the following:

- Data collection: The content gathering agent searches the relevant sources and compiles all the necessary information about the software updates or new features.

- Information design: The information designer agent takes that raw content and structures it into an outline for the documentation set.

- Drafting: The outline and collected content are handed off to the technical writer agent, which generates the draft documentation text.

- Reviewing: The drafted documents are passed to the editorial reviewer agent for proofreading and quality assurance. The editorial reviewer agent also sends feedback and edits back to the technical writer agent.

- Redrafting: The technical writer agent incorporates the editorial reviewer agent’s changes.

- Re-reviewing: The revised documents are passed to the editorial reviewer agent for proofreading, quality assurance, and approval.

- Integration testing: In parallel with the review stages, the integration testing agent checks all technical elements in the documents to ensure they are correct and functional.

- Formatting: The formatting agent lays out the finalised content according to design guidelines.

- Final approval and publishing: After revisions and testing, the document is ready for final approval. The publishing agent packages and publishes the completed documentation.
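The workflow above can be sketched as a simple sequential pipeline. Every agent here is a hypothetical stub function standing in for an LLM call, so the code shows only the hand-offs and the review loop, not any particular framework’s API. For simplicity the stages run one after another, although integration testing could run in parallel with reviewing; the integration test stand-in only checks that Python code blocks in the draft parse, using the standard library `ast` module. If the reviewer still has issues after `max_review_rounds`, the pipeline stops and hands over to a human rather than publishing.

```python
import ast
import re

# Every "agent" below is a hypothetical stub standing in for an LLM-backed agent.
# This shows only the hand-offs and the review loop, not any framework's API.

FENCE = "`" * 3  # a Markdown code fence, built up so it doesn't end this example


def gather(sources):
    return "\n".join(sources)


def design(raw_content):
    # Stub: one outline heading per non-empty line of raw content.
    return [line.strip() for line in raw_content.splitlines() if line.strip()]


def draft(outline, feedback=None):
    text = "\n\n".join(f"## {heading}\nTODO: describe {heading}." for heading in outline)
    if feedback:
        text = text.replace("TODO: ", "")  # stub: pretend the writer acted on feedback
    return text


def review(text):
    """Return a list of issues; an empty list means the draft is approved."""
    return ["Remove TODO placeholders"] if "TODO" in text else []


def check_snippets(text):
    """Integration-test stand-in: report Python code blocks that fail to parse."""
    errors = []
    for snippet in re.findall(FENCE + r"python\n(.*?)" + FENCE, text, flags=re.DOTALL):
        try:
            ast.parse(snippet)
        except SyntaxError as exc:
            errors.append(str(exc))
    return errors


def format_doc(text):
    return f"# User guide\n\n{text}\n"


def publish(document, channels=("web",)):
    return {channel: document for channel in channels}


def run_pipeline(sources, max_review_rounds=3):
    outline = design(gather(sources))
    text = draft(outline)
    for _ in range(max_review_rounds):
        issues = review(text)
        if not issues:
            break
        text = draft(outline, feedback=issues)  # redraft, then re-review
    if review(text):
        raise RuntimeError("Draft still not approved; escalate to a human reviewer.")
    if check_snippets(text):
        raise RuntimeError("Code snippets failed integration testing.")
    return publish(format_doc(text))


result = run_pipeline(["Installing", "Configuring"])
print(result["web"])
```

Keeping the loop bounded and ending in an explicit escalation is one way to keep a human in the loop without having them watch every step.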

Implementing continuous improvement

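The agents could improve over time through feedback loops integrated into the workflow. The underlying language models don’t learn on their own between runs, so in practice this means feeding feedback back into the agents’ prompts or reference material, or periodically fine-tuning the models.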

For example, user feedback and usage analytics can inform the content gathering agent about which sections of the documentation are accessed most frequently or which generate confusion, leading to targeted updates.
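As an illustration of how usage analytics might feed back into the workflow, the sketch below flags pages whose “not helpful” rate crosses a threshold and orders them by page views, producing a priority list that could be handed to the content gathering agent. The `PageStats` record, the thresholds, and the sample figures are all hypothetical; real data would come from whatever analytics or feedback tool you use.

```python
from dataclasses import dataclass


@dataclass
class PageStats:
    # Hypothetical analytics record; real fields depend on your analytics tool.
    path: str
    views: int
    helpful: int       # "Was this page helpful?" yes votes
    not_helpful: int   # "Was this page helpful?" no votes


def pages_to_update(stats, min_votes=5, threshold=0.3):
    """Return paths whose 'not helpful' rate is at least `threshold`, most-viewed first.

    Pages with fewer than `min_votes` votes are skipped, as their rate is unreliable.
    """
    flagged = []
    for page in stats:
        votes = page.helpful + page.not_helpful
        if votes >= min_votes and page.not_helpful / votes >= threshold:
            flagged.append(page)
    flagged.sort(key=lambda page: page.views, reverse=True)
    return [page.path for page in flagged]


# Made-up sample data for illustration only.
sample = [
    PageStats("/install", views=1200, helpful=40, not_helpful=25),
    PageStats("/api/auth", views=300, helpful=3, not_helpful=4),
    PageStats("/faq", views=5000, helpful=90, not_helpful=10),
    PageStats("/upgrade", views=800, helpful=6, not_helpful=6),
]
print(pages_to_update(sample))  # ['/install', '/upgrade', '/api/auth']
```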

Potential benefits

Implementing an agentic AI system in technical documentation workflows could significantly reduce manual effort, enhance document accuracy, and keep documentation closely aligned with software changes, providing a seamless experience for end users.

Some key benefits we could see with this approach are:

- Scalability: The agents could work in parallel on different parts of the documentation, increasing throughput.

- Consistency: The agents would adhere to defined guidelines and best practices, leading to more consistent documentation.

- Efficiency: Automating many of the repetitive, manual tasks could significantly streamline the documentation workflow.

- Continuous improvement: The agents could learn and refine their processes over time, getting better at their roles.

Limitations and potential downsides

However, there are limitations.

Defining the correct agents and workflow

There are some weaknesses in the workflow we outlined earlier:

- There doesn’t seem to be an agent or workflow step for checking whether the information is technically accurate.
  - This check might require a human.
  - For humans, it’s important to find mistakes early on, as fixing a problem sooner takes less time than fixing it later. For an AI system, it might matter less when accuracy checks are carried out, as the time wasted on rework might be negligible.

- There doesn’t seem to be a way to incorporate variable text, snippets of existing text, or conditional text.

- There doesn’t seem to be a way to incorporate metadata, other than at the document level.
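To illustrate what variable and conditional text involve, here is a minimal sketch of a pre-processing step that could resolve them before content reaches the writer or formatting agents. The `{{name}}` and `[if key=value]...[/if]` syntax is hypothetical, loosely modelled on features found in help authoring tools; it does not support nested conditions, and an undefined variable raises a `KeyError` so gaps are caught rather than published.

```python
import re

# Hypothetical single-sourcing markup for illustration:
#   {{name}}                -> replaced with a variable's value
#   [if key=value]...[/if]  -> kept only when the condition matches
VARIABLE = re.compile(r"\{\{(\w+)\}\}")
CONDITION = re.compile(r"\[if (\w+)=(\w+)\](.*?)\[/if\]", re.DOTALL)


def resolve(text, variables, conditions):
    """Apply conditional text first, then substitute variables (no nesting)."""
    def keep_or_drop(match):
        key, value, body = match.groups()
        return body if conditions.get(key) == value else ""

    text = CONDITION.sub(keep_or_drop, text)
    # An undefined variable raises KeyError, so gaps fail loudly instead of being published.
    return VARIABLE.sub(lambda match: variables[match.group(1)], text)


source = "Welcome to {{product}}.[if audience=admin] Admins can reset passwords.[/if]"
print(resolve(source, {"product": "Acme Docs"}, {"audience": "admin"}))
# Welcome to Acme Docs. Admins can reset passwords.
print(resolve(source, {"product": "Acme Docs"}, {"audience": "user"}))
# Welcome to Acme Docs.
```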

Time and cost to develop

Implementing something like this would require a significant upfront investment in training and integrating the various AI agents. Given the complexity of technical writing, there is a risk that you end up building your own help authoring tool.

The need for programming skills

With most agentic AI development tools, you need to write a Python application to create your system. In practice, you can adapt an existing template to suit your needs, but this still creates obstacles for people who are not programmers.

Integrating with existing systems

The AI agents would need to be integrated with existing tools and platforms used by software teams, such as GitHub for development updates, Jira for tracking issues, and content management systems for hosting documentation.

Context memory limits

Mattias Sander also commented:

I’ve found that I quickly run out of tokens, and I haven’t figured out how to best structure the prompts to have the agents write the text in line with the style guide I have.

Transparency

There’s a need for transparency in how the AI agents function and make decisions, particularly when errors occur.

Alternatives

The alternative might be to keep using authoring tools that have AI features built in (such as Flare/Madbot, DocsHound, and Heretto). You’d probably lose the ability of different agents to communicate and coordinate tasks with each other, but you might get 90% of the benefits of AI without all the time and cost of developing an agentic AI system.

Another would be to use a tool like Zapier Central, which can create a chain of AI bots that work across a range of popular apps.

The choice depends partly on whether the technical writing process is consistent and predictable enough for an AI agent to manage, or whether it requires a human to deal with all the messiness of a real-world documentation workflow.

Summary

Multiple AI agents might provide benefits in terms of increased productivity, quality, and consistency for technical documentation teams. However, at the moment, there are some significant downsides and limitations. You would need to have a human in the loop to guide the agents.

The technical writer’s ability to integrate different aspects of a process and help the AI system focus on the right things still seems to be needed and relevant. In practice, that might mean people continue to use help authoring tools, albeit with AI capabilities embedded in them.

More information

A small plug for our Using Generative AI in technical writing training course.
